All morning the automated driving technology world has been buzzing about what CNBC now confirms is a Tesla acqui-hire of DeepScale, a startup focused on embedded computer vision. The buzz started when DeepScale CEO Forrest Iandola changed his LinkedIn employer status to Tesla yesterday evening and posted a message stating:

I joined the Tesla #Autopilot team this week. I am looking forward to working with some of the brightest minds in #deeplearning and #autonomousdriving.

Forrest Iandola

At least 10 former DeepScale engineers and researchers also switched their employer status from the startup to Tesla over the last 48 hours, suggesting that if Tesla hasn’t bought the startup outright, it has at least hired away much of its staff. Tesla has not yet confirmed the acqui-hire, and did not immediately respond to a request for comment.

DeepScale comes out of a UC Berkeley research group called “Berkeley Deep Drive,” where co-founders Iandola and Kurt Keutzer worked on improving the efficiency of deep neural nets (DNNs) for computer vision. As Junko Yoshida explains in this helpful backgrounder on the company, progress in computer vision between 2012 and 2016 was largely based on increasing the resources used to run DNNs, leading Iandola and Keutzer to pursue simplified networks that could perform accurately and with low latency using the limited resources found in embedded systems. The result was a DNN called SqueezeNet, which could classify images from the ImageNet dataset with accuracy comparable to AlexNet, a top-performing (as of 2012) but very large DNN [PDF here], while using about 50 times fewer parameters and, with model compression, taking up less than half a megabyte.
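The size comparison comes down to simple arithmetic on parameter counts. As a rough sketch, assume about 61 million parameters for AlexNet and about 1.25 million for SqueezeNet (approximate figures assumed here for illustration, not taken from this article), each stored as a 32-bit float:

```python
# Back-of-the-envelope model sizes.
# The parameter counts below are approximate assumptions for illustration.
BYTES_PER_FLOAT32 = 4


def model_size_mb(num_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Uncompressed weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


ALEXNET_PARAMS = 61_000_000     # roughly 61 million (assumed, approximate)
SQUEEZENET_PARAMS = 1_250_000   # roughly 1.25 million (assumed, approximate)

alexnet_mb = model_size_mb(ALEXNET_PARAMS)
squeezenet_mb = model_size_mb(SQUEEZENET_PARAMS)
param_ratio = ALEXNET_PARAMS / SQUEEZENET_PARAMS

print(f"AlexNet:    {alexnet_mb:.0f} MB uncompressed")
print(f"SqueezeNet: {squeezenet_mb:.1f} MB uncompressed")
print(f"Parameter ratio: about {param_ratio:.0f}x")
```

On these assumptions the uncompressed SqueezeNet weights come to roughly 5 MB, which is where the "about 50 times" figure comes from. Getting under half a megabyte additionally requires compressing the weights.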

Iandola’s research with Berkeley Deep Drive was funded by several automotive companies, the DeepScale founder told AI Time Journal in an interview earlier this year, including Ford, Bosch and Samsung. These companies told Iandola that their autonomous drive development programs were hampered by AI systems that required a trunk full of servers, and that “they wanted to find a path to lower costs and produce vehicles with AI that would be affordable and profitable.” Iandola explicitly presents DeepScale as a competitor to the biggest player in automotive computer vision, Mobileye, which provided the foundation for Tesla’s first iteration of Autopilot before the relationship blew up in the wake of the fatal Josh Brown crash. Here’s how he characterized DeepScale’s competitive advantage:

With Mobileye, you really have to buy the complete solution. If you look at how they’ve developed their product, you have to buy their camera, their processor, and their software all in one bundle. If you only use a small piece of that technology or you want to use it in a different way, it’s not really set up for that. That was the only way to sell solutions into the automotive market when Mobileye came on the scene about 20 years ago. We see the automotive value chain shifting to more open platforms that accept and desire solutions from 3rd party suppliers that enable OEMs and tier-1s to differentiate themselves.

One of the unique offerings of DeepScale’s solutions is that it provides automotive customers with options that they haven’t had in the past. Customers can use our technology as the spearhead to their perception stack, or they can peel off modules from our product portfolio to complement the rest of their perception solution.

Forrest Iandola

SqueezeNet eventually gave rise to a variety of DNNs for other applications, including SqueezeDet for object detection and SqueezeSeg for semantic segmentation of lidar data, as well as SqueezeNext, an even simpler image classification network that can be optimized for mobile and embedded hardware. This research culminated in SqueezeNAS, which uses neural architecture search to automate the development of DNNs; by last year it was producing networks that were more accurate and lower-latency than earlier, manually designed networks. Once again, the DeepScale researchers’ focus on efficiency seems to have paid off: they found that a “supernetwork” approach like SqueezeNAS can cut the cost of the GPU time needed to search for and train a DNN optimized for both the inference hardware and the task (in their example, semantic segmentation) from a $70,000 cloud computing bill to just $700 (based on Amazon Web Services prices).
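To put the $70,000 and $700 figures in perspective, the short sketch below works out the reduction factor and converts each bill into GPU-hours. The hourly price is a hypothetical placeholder chosen only to make the conversion concrete; it is not a figure from DeepScale or Amazon Web Services.

```python
def reduction_factor(before_usd: float, after_usd: float) -> float:
    """How many times cheaper the second bill is than the first."""
    return before_usd / after_usd


def gpu_hours(bill_usd: float, usd_per_gpu_hour: float) -> float:
    """GPU-hours that a given bill buys at a flat hourly price."""
    return bill_usd / usd_per_gpu_hour


# Hypothetical flat price per GPU-hour, for illustration only.
ASSUMED_USD_PER_GPU_HOUR = 3.0

factor = reduction_factor(70_000, 700)
hours_before = gpu_hours(70_000, ASSUMED_USD_PER_GPU_HOUR)
hours_after = gpu_hours(700, ASSUMED_USD_PER_GPU_HOUR)

print(f"Cost reduction: {factor:.0f}x")
print(f"GPU-hours at the assumed price: {hours_before:,.0f} vs {hours_after:,.0f}")
```

Whatever the actual hourly price, the ratio between the two bills is the same: a hundredfold reduction in search and training cost.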

In January of this year DeepScale announced its first product, a modular deep learning perception software portfolio for driver assistance called Carver21. Emphasizing the modularity and efficiency of its software, the startup targeted automotive OEMs and Tier One suppliers, arguing that Carver21 could be integrated with any sensors and processors a customer wanted, to deliver the driver assistance features they desired. With three DNNs running in parallel on an NVIDIA Drive AGX Xavier processor, DeepScale claimed that Carver21 could deliver perception for “features like highway automated driving and self-parking that would be found in what’s considered an L2+ automated vehicle” using just 2% of Xavier’s processing power.

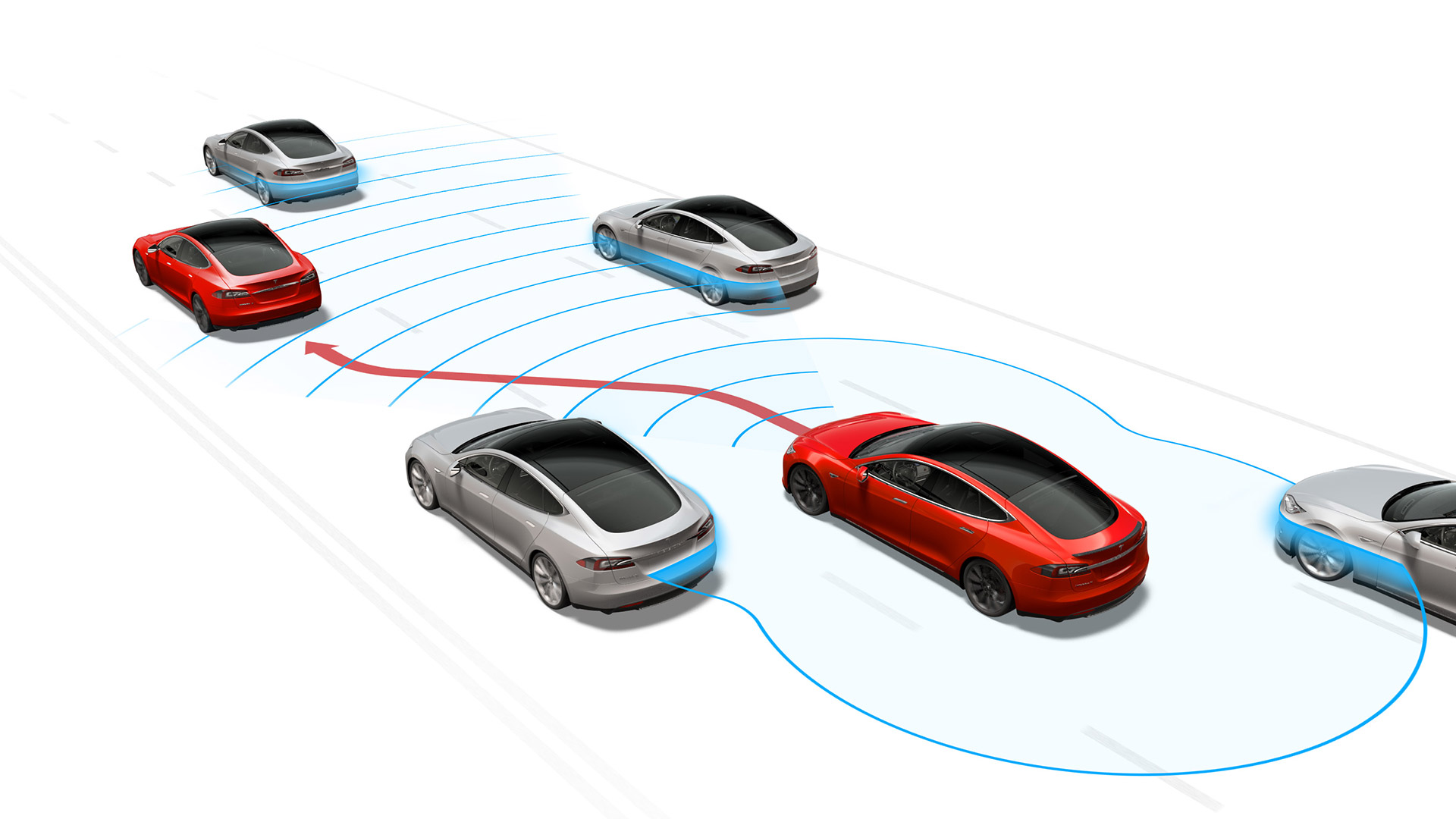

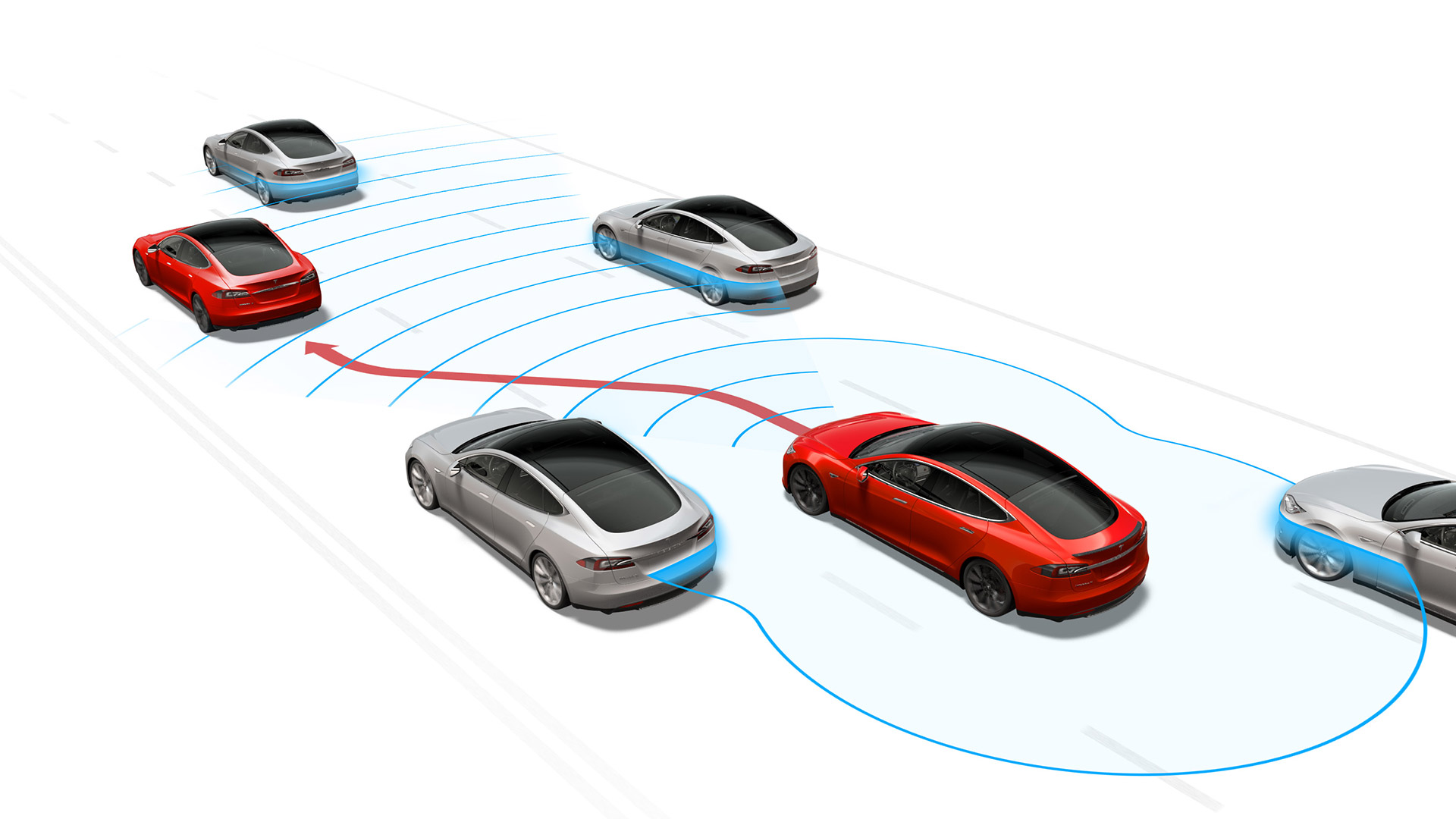

This focus on high-performance, low-resource computer vision for automated driving makes DeepScale an obvious fit for Tesla’s ambitious, vision-centric approach to autonomous drive technology development. Constrained by its need to sell cars at price points that support its goal of half a million units per year of production, Tesla can’t afford to throw 360-degree camera, radar and lidar coverage and massive compute systems at the problem the way other autonomous drive stack developers do to deliver consistent performance. This means that Tesla has to develop extremely fast, accurate and reliable DNNs for a vision-heavy autonomous drive system that can run on relatively low-power hardware… which is precisely what DeepScale has been focused on.

To achieve this, both firms emphasize the co-development of hardware and software. Though DeepScale doesn’t develop its own hardware, Iandola says it has worked closely with hardware partners and that “those partnerships are pretty tight and we do often to some extent influence each other’s design choices and collaborate on what’s built.” This very much matches Tesla’s approach (not to mention Mobileye’s), which has seen it develop its own inference processing hardware, specific to its in-car DNNs. The development automation brought to bear by DeepScale’s SqueezeNAS supernetwork is also very much in line with Tesla Autopilot boss Andrej Karpathy’s so-called “Software 2.0” paradigm, suggesting the two firms take similar approaches to development. DeepScale could also bring a new testing methodology to Tesla, similar to functional safety assessments but updated for software-defined vehicles, as Iandola hinted at the development of such a system last year.

Perhaps most importantly, Tesla’s acqui-hire of DeepScale reflects the intense competition for talent in the autonomous drive technology space, which has already driven Apple to buy Drive.AI and Waymo to hire engineers from the defunct Anki, and will likely see other major players purchase smaller startups for their talent. The Drive has heard an unconfirmed report that at least one other major player in the autonomous vehicle development space had recently been in talks to acqui-hire DeepScale, with negotiations circling a price approaching nine figures. It’s not clear if those talks broke down or if Tesla outbid the other player, but in today’s market for automated driving AI talent an acqui-hire like DeepScale can’t have been particularly cheap.

Based on the poor performance demonstrated by the just-released “Advanced Summon” feature as well as the relatively basic demonstrations Tesla gave at its “Autonomy Day” event, the electric automaker has a long way to go before it will be ready to deliver the Level 5 “Full Self-Driving” solution that it started collecting customer deposits for in October 2016. Though DeepScale’s talent should shore up Tesla’s autonomous drive technology team, which has been depleted by waves of turnover that have reportedly accelerated in recent months, the company still faces the toughest goal on the shortest timeline in the sector. It will need every ounce of talent that its new hires can bring to bear, as well as the legendary patience of its customers, as it tries to deliver a “general solution” to full autonomy that works in every domain using a limited sensor suite.