Rivian just dropped the hammer, implying Tesla is dead wrong about building self-driving cars with cameras alone.

During the automaker’s AI and Autonomy Day in Palo Alto, California, on Thursday, Rivian unveiled its proprietary in-house silicon, a roadmap for its next-generation hands-free driver-assist system, an AI-driven assistant that can handle text messaging, and the adoption of lidar hardware.

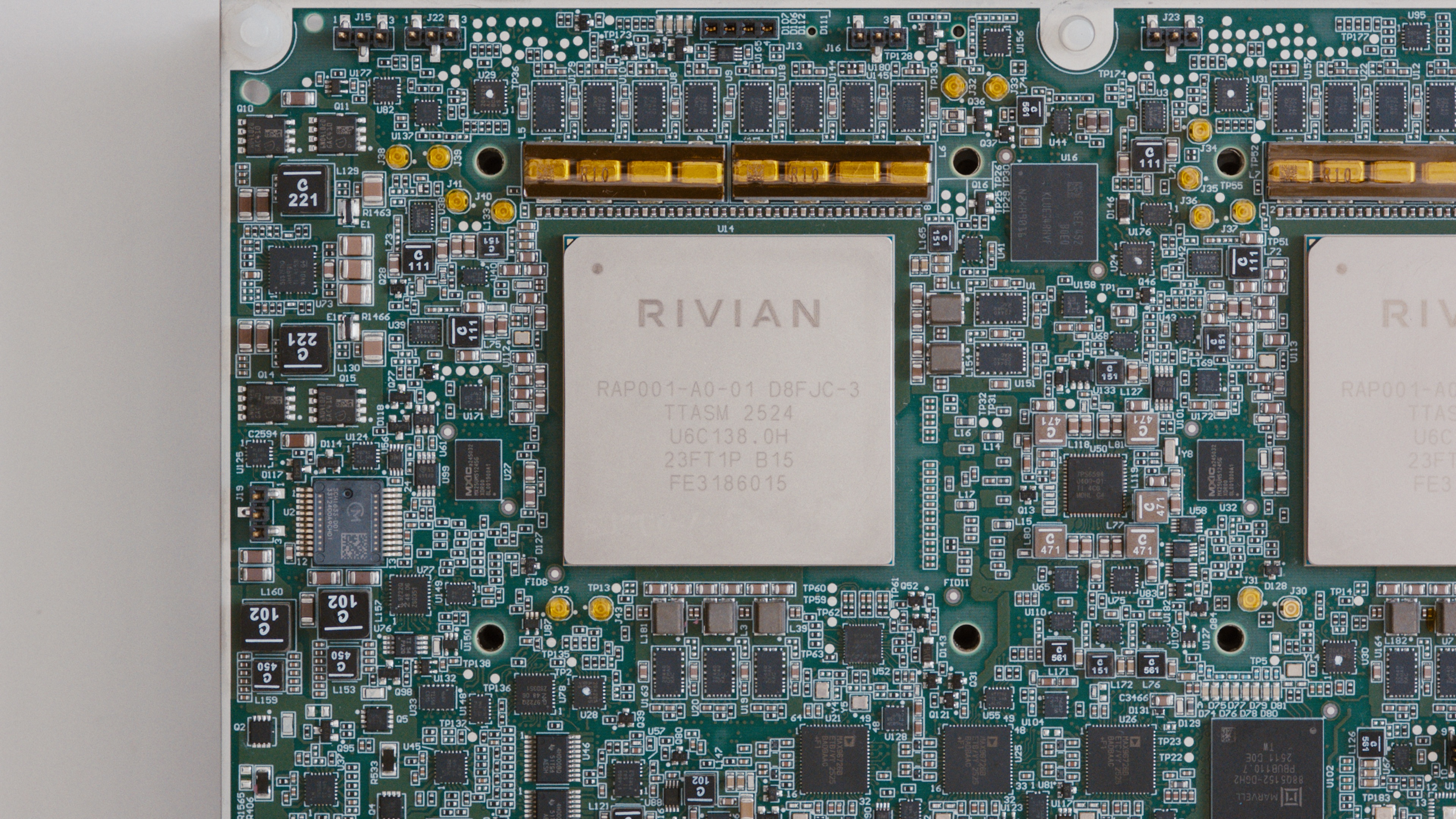

The core of the announcement is Rivian’s new in-house designed chip, dubbed the Rivian Autonomy Processor (RAP1), which will replace the Nvidia-sourced chip used today. The 5nm chip puts processing and memory onto a single module and is paired with the automaker’s Gen 3 Autonomy Computer (ACM3), an evolution of the current ACM2 that launched in 2025 on the second-generation R1. While RAP1 will be key to the automaker’s self-driving ambitions, it’s the addition of lidar hardware that shows Rivian thinks the competition’s approach is flat-out wrong.

Lidar will first appear on the upcoming R2 at the end of 2026. The automaker would not say when, or if, lidar will be added to the R1, but it’s hard to imagine the more expensive flagship models won’t adopt the hardware soon after it debuts on the new R2.

Rivian’s newly announced Universal Hands-Free (UHF) driver-assist system, which launches later this month on second-generation R1 models via a free over-the-air software update dubbed 2025.46, will become more capable once lidar is added. The lidar hardware will provide three-dimensional spatial data, redundant sensing, and improved real-time detection in edge-case driving scenarios that today’s sensor suite might miss. That suite consists of 10 external cameras, 12 ultrasonic sensors, 5 radar units, and a high-precision GPS receiver, plus Rivian’s in-house developed RTK GNSS (Real-Time Kinematic Global Navigation Satellite System), which combines GPS with ground-station signals to pin down the vehicle’s latitude and longitude to within 20 cm.

A Rivian spokesperson told The Drive exclusively that lidar is necessary and that a camera-only system is not good enough because “Cameras are a passive light sensor, which means in night conditions and fog they don’t perform as well as active light sensors like lidar.” (Tesla’s Full Self-Driving system uses only cameras, and the automaker stripped forward-facing radar units from its cars.)

“Lidar can actually double visibility at night. Cameras are great at seeing the world until they can’t,” the spokesperson told The Drive.

Sam Abuelsamid, vice president of market research at Telemetry, told The Drive, “Having multiple sensing modes is essential to provide a robust and safe ADS solution. Each of the different sensor types has strengths and weaknesses and they are all complementary to each other. Cameras are excellent for object classification but they perform poorly in low light or when the sun shines directly into them. Unless they are configured for stereoscopic vision, they are also poor at determining distance to an object.”

“Lidar provides resolution in between cameras and radar, works in all lighting and with modern software can even cope with rain and snow. But until recent years, it was considerably more expensive. However, in the last few years the cost of solid state lidar has come down substantially and some of the latest units from companies like Hesai are under $200,” Abuelsamid said.

Abuelsamid continued, “When advocates of camera-only solutions talk about humans only driving with two eyes, they are wrong on multiple counts. Humans use multiple senses when they drive: stereoscopic vision for depth perception (which Tesla doesn’t have), hearing, and touch, feeling the feedback through their hands and back. Eyes have much higher dynamic range than cameras, making them much more useful in adverse lighting. The human brain also processes information very differently, and human perception is much better at filtering extraneous information and classifying what it sees.”

Rivian told The Drive that at launch, lidar-equipped vehicles will get a new augmented reality visualization in the driver display, plus improved detection of objects around the vehicle, particularly at longer range and in challenging conditions. Rich spatial data from these vehicles will also be used to improve onboard model performance for all second-generation R1 and later vehicles, including those without lidar. Longer term, lidar-equipped vehicles will also gain exclusive autonomy features enabled by the additional sensor and onboard compute.

The lidar and the RAP1-powered ACM3 hardware are currently undergoing validation, and Rivian expects to ship both on the R2 at the end of 2026.

Got a tip about future tech? Send us a note at tips@thedrive.com