The National Transportation Safety Board had some withering criticism for Tesla during a public meeting on Tuesday regarding the 2018 fatal crash of a Model X SUV being operated on Autopilot, claiming the automaker failed to take obvious steps to prevent “foreseeable misuse” of its “completely inadequate” semi-autonomous driving aid and stonewalled officials who asked for its plans on the matter back in 2017.

Chairman Robert Sumwalt also revealed that investigators determined the driver of the crashed Model X, an Apple engineer named Walter Huang, was likely playing a video game with both hands off the steering wheel when his car suddenly veered into an exposed concrete divider on a northern California freeway two years ago. His conclusion? The same thing we’ve been saying for years.

“You cannot buy a self-driving car today,” he said. “You don’t own a self-driving car, so don’t pretend you do… This means that when driving in the supposed ‘self-driving’ mode, you can’t read a book, you can’t watch a movie or TV show, you can’t text and you can’t play video games.”

Specifically, the NTSB castigated Tesla for resisting calls to adopt better technology that would ensure drivers are actually paying attention while Autopilot is engaged, and that would limit its use to approved areas where it's safe to do so. Huang had previously told relatives that his Model X could not safely navigate this exact interchange in Mountain View, CA, while on Autopilot. Officials say that simply requiring the driver to tug on the steering wheel at set intervals isn't enough, nor is letting owners use their cars to test Autopilot on whatever unfamiliar or unsuitable road they happen to drive.

It also called on smartphone manufacturers and companies that supply phones to their employees to automatically install lockout technologies to prevent the use of distracting apps or functions when the device detects its owner is driving. Lastly, it saved some special interagency criticism for the “misguided” laissez-faire approach regulators at the National Highway Traffic Safety Administration have taken to managing this wild west of early semi-automation in consumer cars.

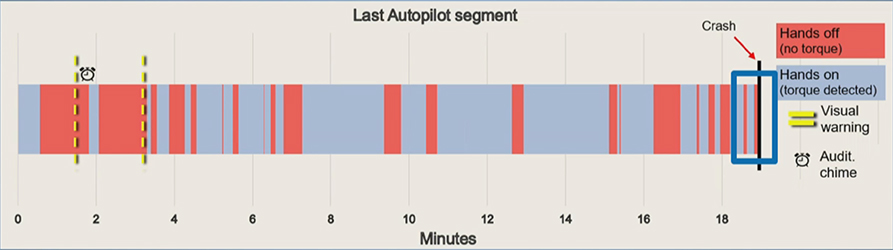

Data collected by investigators indicated that Autopilot was engaged for the last 19 minutes of Huang's 28.5-minute trip, during which he frequently applied no torque to the steering wheel. Huang received two visual alerts to do so early on, after which he kept his hands on the wheel for longer stretches. But he was mostly hands-off in the two minutes leading up to the crash, including the final six seconds before impact.

The NTSB suggested that Huang's lack of interaction with the steering wheel reflects an over-reliance on automation, a crutch enabled by Tesla and other manufacturers deploying Level 2 semi-autonomy outside the SAE's defining guidelines.

While Tesla does define a set of constraints, called an Operational Design Domain (ODD), that indicate the conditions in which Autopilot is intended to be used, it does not prevent drivers from engaging Autopilot outside those limits. Furthermore, the NTSB said Tesla told its investigators that ODD limitations were not applicable to Autopilot because the system is classified as a Level 2 driver assistance system, making it the driver's responsibility to determine an acceptable operating environment.

In fact, the NTSB had issued recommendations in 2017, following an earlier crash involving Tesla's Autopilot, that called on manufacturers to tighten ODD limitations and develop better driver monitoring systems. The NTSB says five of the six manufacturers it sent the recommendations to responded; Tesla did not. CEO Elon Musk did, however, defend Tesla's decision to reject eye tracking as a driver monitoring method, calling it "ineffective" in a 2018 tweet.

The NTSB echoed its findings from 2017 during the meeting: “Since the driver may interact with the steering wheel without visually assessing the environment, monitoring of steering wheel torque is a poor surrogate measure of driver engagement with the driving task.”

What happens in the wake of today's meeting is unclear: the NTSB is an independent federal agency that can only make policy recommendations, not enforceable rules. Its public upbraiding of NHTSA shows that the federal government isn't exactly moving in lockstep on how to handle this emerging technology, and the NTSB's continued inability to force Tesla to cooperate doesn't inspire much confidence that regulators are equipped to deal with outright obstinacy.

We reached out to Tesla for comment, and we'll be sure to update if we hear back. At the time of the public meeting, CEO Elon Musk was tweeting about ice cream.