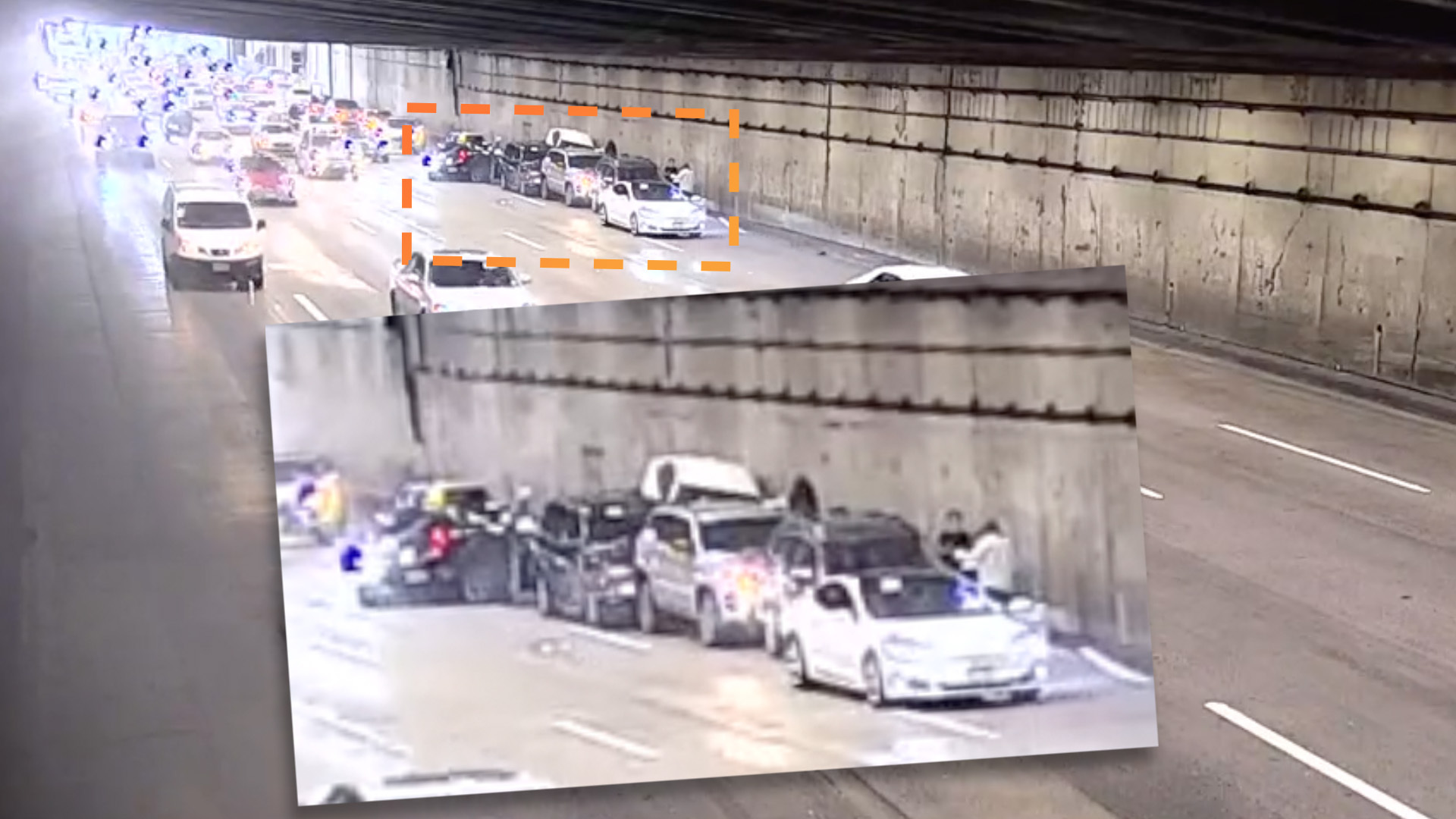

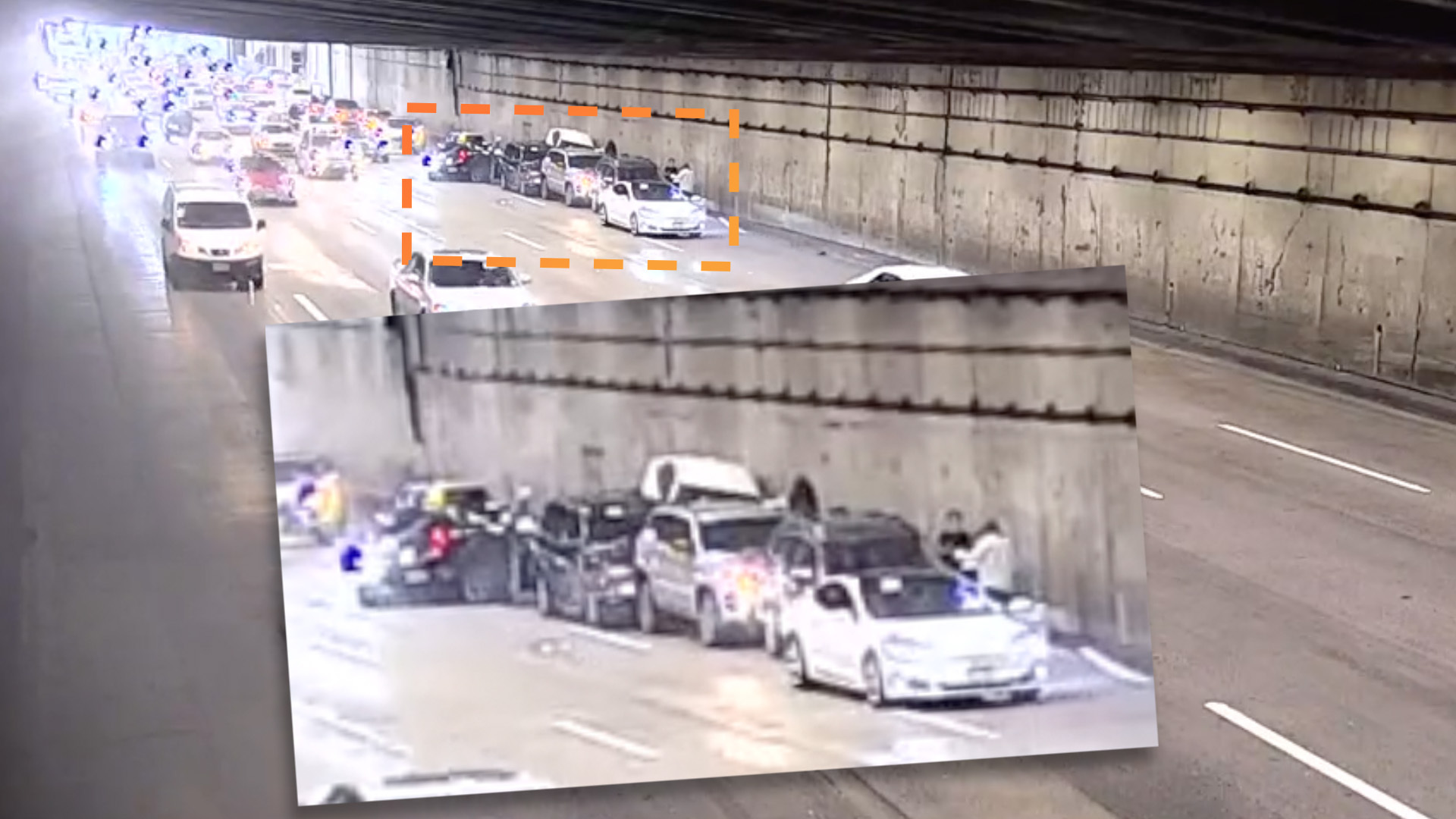

Footage has surfaced of a Tesla Model S driver causing an eight-car pileup in San Francisco while using the car’s “Full Self-Driving Beta” function. Ironically, the crash occurred the same day Tesla CEO Elon Musk announced the feature was available to the public rather than a select, vetted subset of customers.

The video, obtained by The Intercept, depicts a crash that reportedly occurred on the San Francisco Bay Bridge on Nov. 24. It shows a white Model S moving into the far-left lane and slowing to a stop, halting traffic behind it. Its driver reportedly acknowledged they had been using Tesla’s “Full Self-Driving Beta” software. The obstruction caused a crash among eight other cars, resulting in injuries to nine occupants, one of whom was a 2-year-old child (though their injuries were minor). Traffic on the bridge was reportedly blocked for more than an hour.

That very morning, Elon Musk declared on Twitter that Full Self-Driving Beta (FSDB) would be available to anyone in North America who had purchased the function. FSDB expands the suite of features available through “Autopilot,” Tesla’s advanced driver-assistance system (ADAS), though according to Tesla it does not raise the car’s capability beyond SAE Level 2 autonomy (the same category as Autopilot). Other automakers already have SAE Level 3 systems on the road in limited use.

Previously, access to FSD had been restricted to a customer pool that had both paid up to $15,000 and achieved a high enough safe driving score. (Tesla owners, however, have criticized the scoring system for rewarding what they consider risky driving.)

Tesla’s automated driving systems, both Autopilot and FSDB, are under investigation by the National Highway Traffic Safety Administration after a string of crashes. The Intercept reported that, according to NHTSA data, Autopilot was associated with 273 known crashes between July 2021 and June 2022. Additionally, a dozen collisions with emergency vehicles have brought Autopilot a step away from being recalled.

Regulatory trust in Tesla’s ADAS technology has eroded to the point that California passed a law targeting Tesla’s use of the term “full self-driving.” Because, according to Tesla, FSDB requires constant driver monitoring, the state considers FSD a driving assist and not a self-driving system. If Tesla is forced to stop calling its top ADAS package “Full Self-Driving,” the consequences could be serious for the company, whose value Musk has tied to the FSD software’s viability.

“It’s really the difference between Tesla being worth a lot of money or worth basically zero,” Musk has said of Full Self-Driving.

The NHTSA is investigating the incident in the video.

Got a tip or question for the author? You can reach them here: james@thedrive.com