Computer graphics researchers at Purdue University have developed a touchscreen method of controlling drones that makes it easier to fly and take pictures at the same time, the university reports.

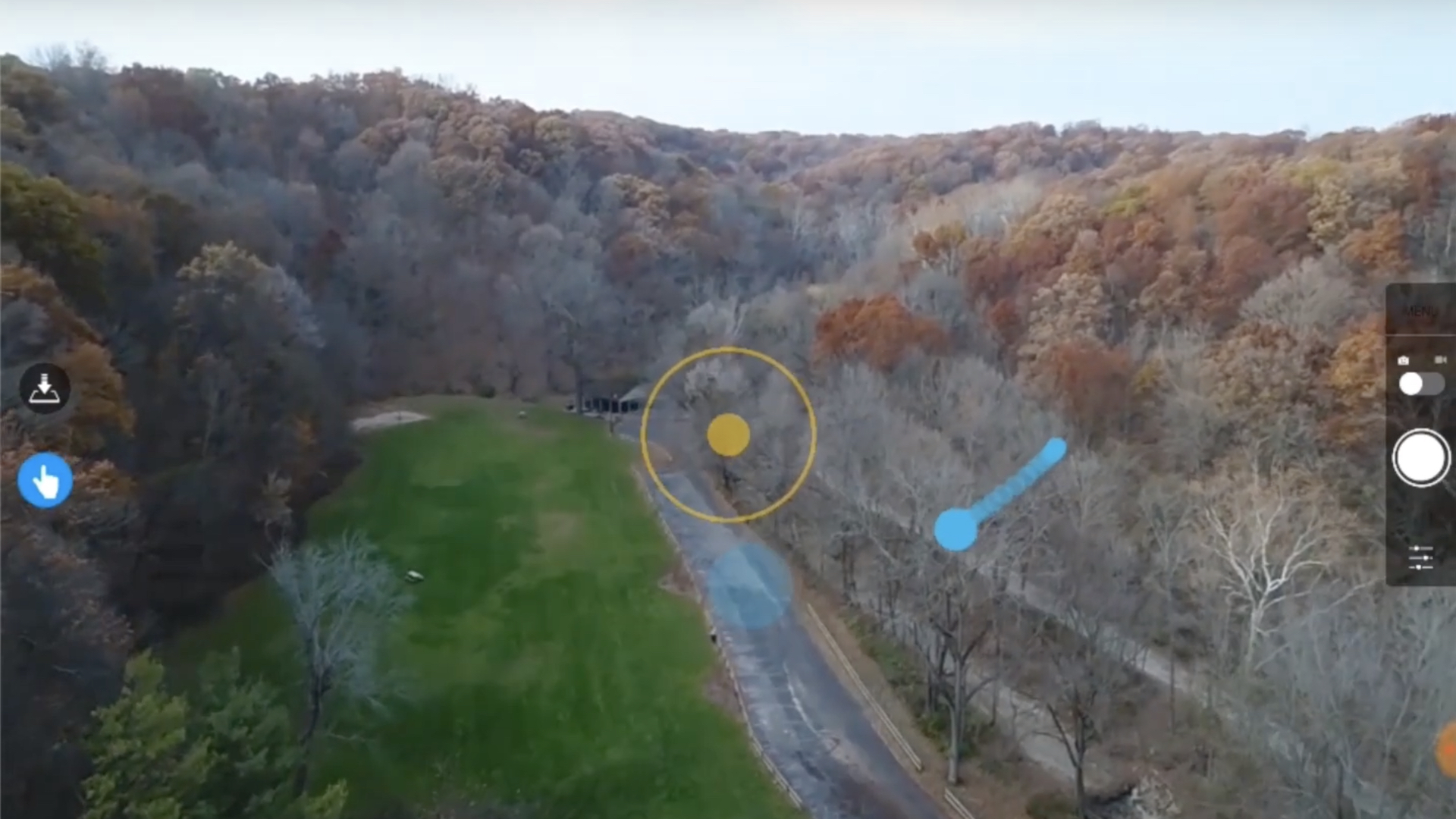

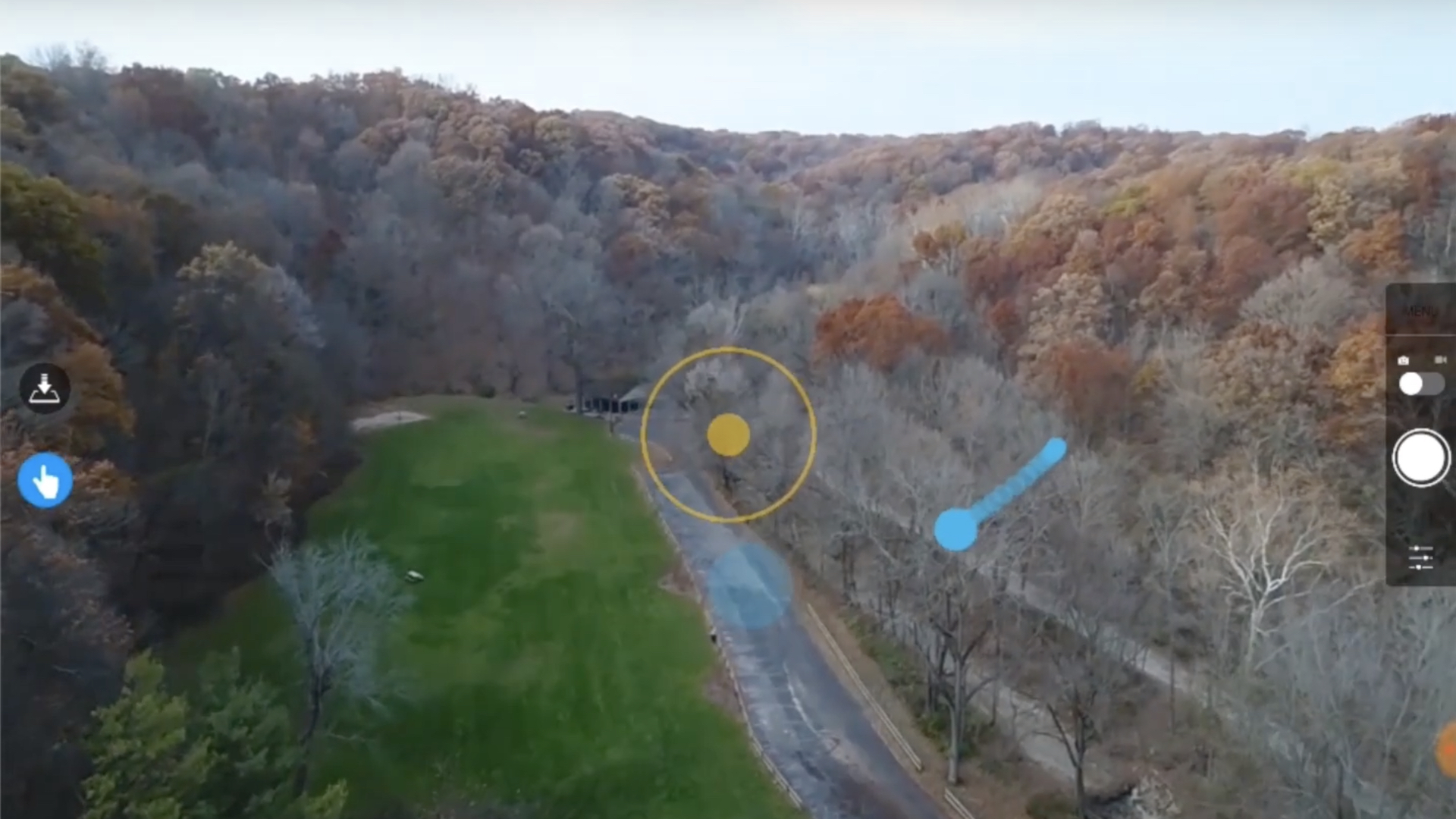

Professor of computer graphics technology Bedrich Benes and doctoral student Hao Kang worked with corporate researchers to build FlyCam, software that essentially combines the drone’s movements with those of its onboard camera. The result is an app that runs on a tablet or smartphone and is designed to spare users from having to choose between safely maneuvering their UAVs and taking good photos.

“So the user doesn’t have to think about multiple controls for the drone and the camera,” Benes explained. “He or she can think about the drone as a simple three-dimensional flying camera that is being controlled by simple gestures on a touchscreen device.”

According to the research, published in the October issue of IEEE Robotics and Automation Letters, FlyCam distinguishes between one- and two-finger gestures: simply dragging across the device’s screen commands the drone to accelerate, turn, or capture images. A single tap moves the UAV forward, and a double tap moves it backward.

“We did a user study and most of the users performed with the FlyCam better,” said Kang. “It is easier to use just a single simple mobile device compared to the combination of cumbersome remote controls.”

To assess the need for this touchscreen alternative, Benes, Kang, and their team first studied how well licensed drone pilots navigated UAVs with traditional controls. They then compared those results with the performance of first-time users flying drones through the FlyCam software.

“And the people who have picked up a drone for the first time were equal or better than those who are licensed to fly,” said Benes. “That is what actually impressed us the most.”

These tests were done on Android phones and tablets, and users performed far better on devices with larger screens, simply because more screen area allows more precise finger placement and movement. Benes and Kang plan to continue their research, and automated image capture will be a definite part of that work.

Ultimately, this is a more practical solution than others we’ve seen, such as using facial expressions to command a UAV to fly in a certain direction. It focuses on freeing users from unnecessary multitasking and from sacrificing one goal (successful navigation) for another (a good photo).

Hopefully, we will continue to see innovation in this particular niche of the industry. After all, just because remote controls are the ubiquitous standard doesn’t mean there isn’t a better solution out there. Stay tuned.