Even by the standards of modern high technology, automated driving systems are complicated. On the sensor side alone, you have lidar, radar and camera technologies that are tough to fully understand individually and all but overwhelming collectively. Unfortunately, that’s going to get worse before it gets better as a new startup called Outsight has announced an entirely new class of sensor for automated driving that is as technologically complex as it is promising.

Outsight comes out of Dibotics, a firm founded in 2015 around 3D perception data processing and SLAM (simultaneous localization and mapping) technologies that run on-chip without requiring machine learning. Now, as Outsight, the company is applying that technology not to radar, lidar and cameras but to an entirely new sensor type based on something called active hyperspectral imaging. The result is what the company calls a “3D Semantic Camera,” which it claims can provide a real-time 3D map with automatic classification based on material identification.

Hyperspectral imaging (or imaging spectroscopy) involves collecting and processing light intensity data in many bands from across the spectrum, rather than just the red, green and blue bands that conventional cameras capture. Because chemical bonds absorb light at specific wavelengths, bouncing certain kinds of light off a material and measuring the reflectance curve reveals that material’s unique spectral signature. This in turn enables the accurate identification of the specific materials in each pixel of a visible scene, even at long ranges.
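To make the idea concrete, here is a minimal Python sketch of signature matching using the spectral angle, a standard comparison technique in imaging spectroscopy. This is not Outsight's method, which the company has not disclosed. The function names, the five-band layout and the reference reflectance values are all hypothetical and chosen only for illustration.

```python
import math

# Hypothetical reference reflectance curves, all sampled at the same five
# bands. The numbers are invented for illustration, not measured spectra.
REFERENCE_SPECTRA = {
    "asphalt": [0.08, 0.09, 0.10, 0.11, 0.12],
    "ice": [0.90, 0.85, 0.60, 0.30, 0.10],
    "skin": [0.35, 0.45, 0.55, 0.40, 0.20],
}


def spectral_angle(a, b):
    """Return the angle in radians between two spectra treated as vectors.

    The angle depends on the shape of the curve, not its overall
    brightness, so a dimly lit pixel can still match its material.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)


def classify_pixel(spectrum, references=REFERENCE_SPECTRA):
    """Return the name of the reference material with the smallest angle."""
    return min(
        references,
        key=lambda name: spectral_angle(spectrum, references[name]),
    )


if __name__ == "__main__":
    # A pixel whose curve has roughly the shape of the "ice" reference
    # at about half the brightness.
    print(classify_pixel([0.45, 0.42, 0.31, 0.16, 0.05]))
```

Because the match is a direct comparison against known signatures, no training step is involved. A real sensor would need many more bands, calibrated reference spectra and some handling of mixed or unknown materials, none of which this sketch attempts.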

Spectroscopy is a critical tool in modern science, and hyperspectral imaging has been used for some time for defense applications including spy satellites and surveillance as well as for oil and gas exploration, mineralogy, astronomy and agriculture. During a phone interview with The Drive, Outsight co-founder and CEO Cedric Hutchings was cagey about the details of this first application of active hyperspectral imaging but said that the development of a low-power, eye-safe “broadband laser” was critical to Outsight’s solution. Though equally cagey on costs, Hutchings said that Outsight would be targeting ADAS applications as well as fully autonomous vehicles, which suggests the cost could fall to a point where the sensor could be sold profitably as an option on a consumer car.

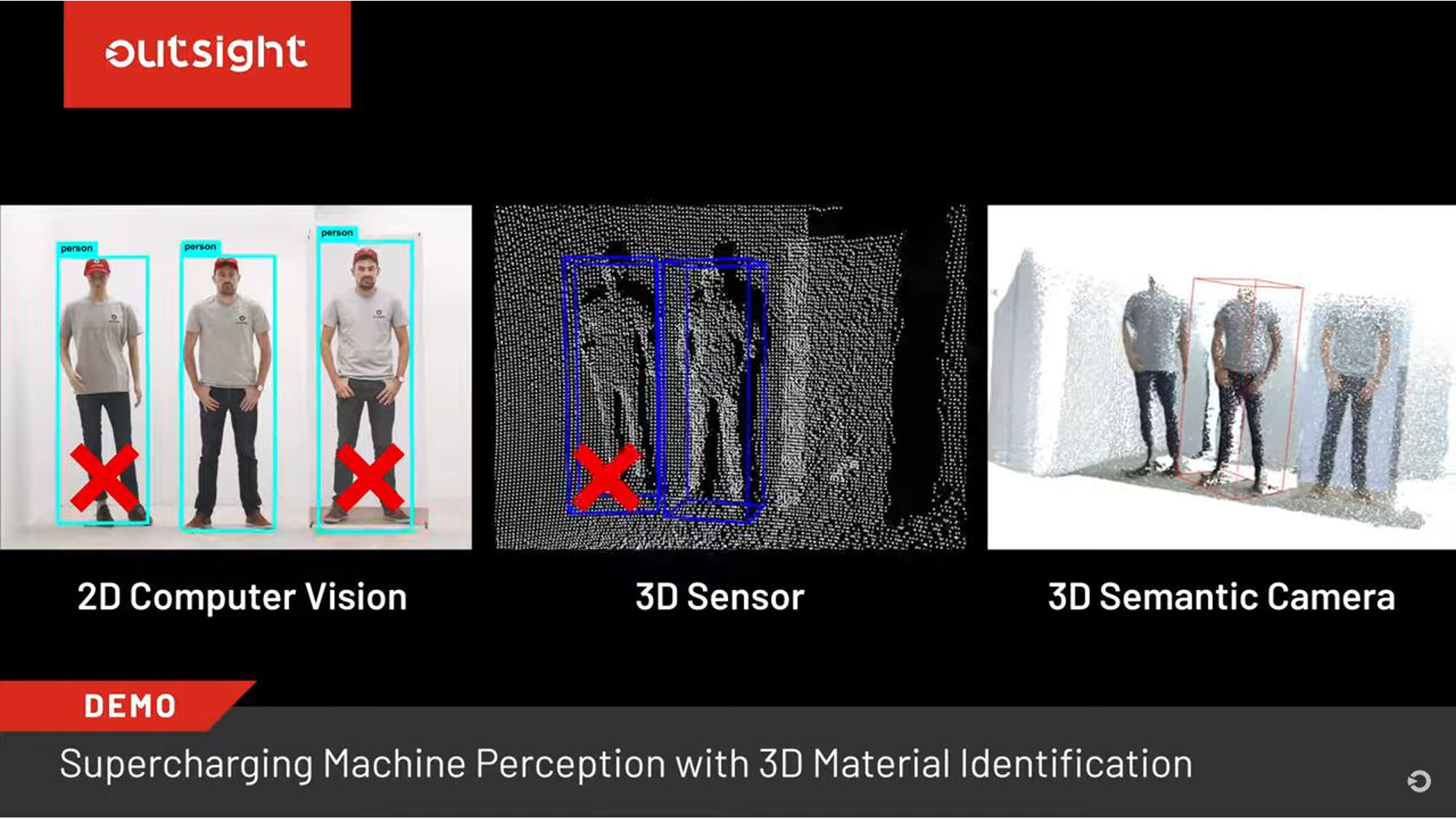

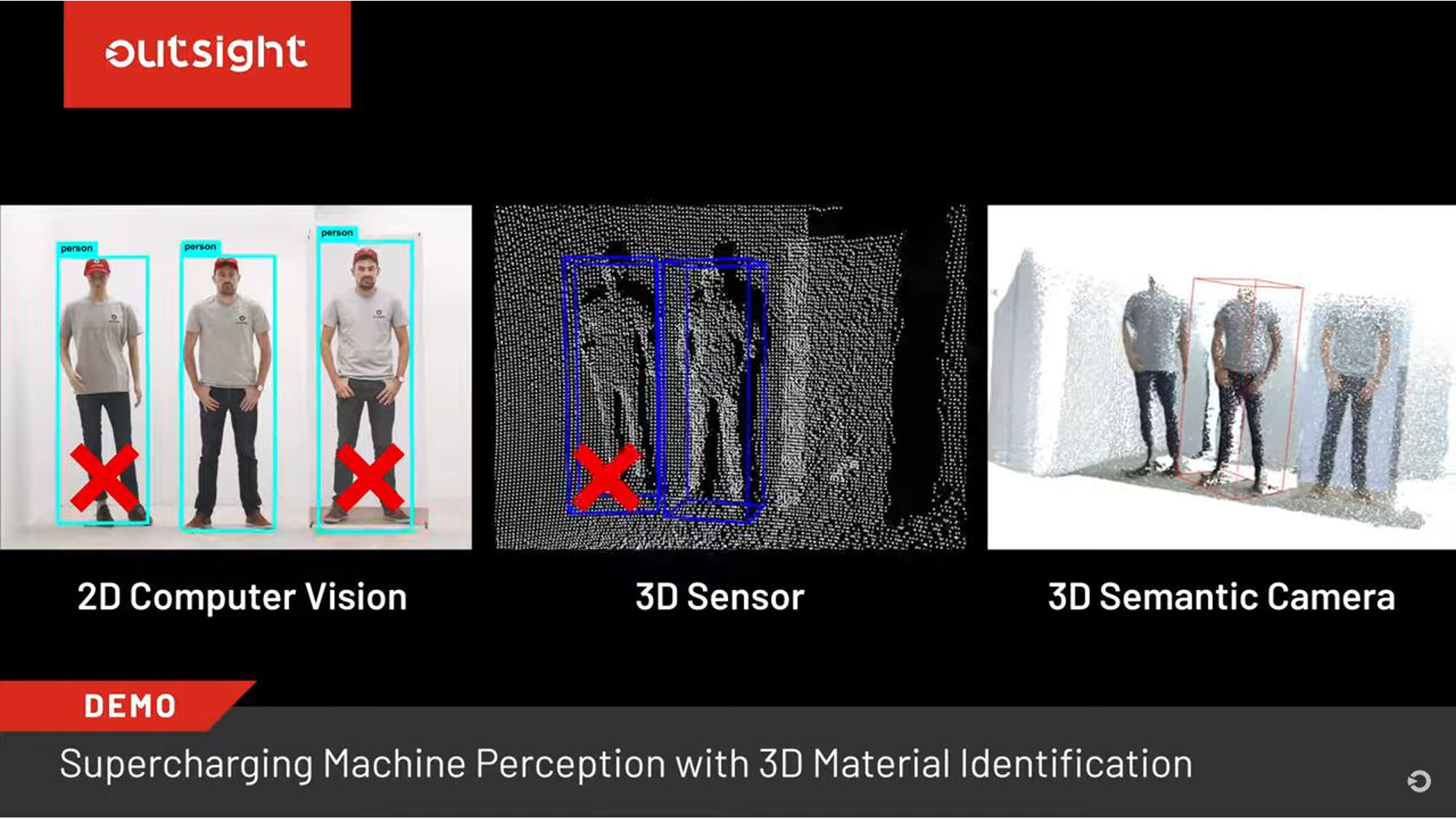

The result is a sensor with remarkable capabilities that seem to fill an important gap in the modern automated driving sensor suite. I’ve written about the potential value of thermal imaging sensors as a tool for the challenging task of identifying and tracking “vulnerable road users” (pedestrians and cyclists) in the wake of the fatal Tempe, AZ crash of an Uber self-driving test vehicle into a pedestrian, and the ability to quickly and precisely classify materials using the 3D Semantic Camera seems like another useful tool to wield against this thorny challenge. Beyond that, the ability to identify hazards like black ice on a road surface, which can be extremely difficult using cameras (and basically impossible using radar/lidar), has obvious potential both for AVs and for ADAS and “augmented driving” systems.

It’s also worth highlighting the fact that the 3D Semantic Camera doesn’t rely on machine learning for its classification capabilities, meaning all the black-box uncertainty and the potential for bias and holes in training data are avoided. Obviously machine learning has made huge strides and now enables a lot of automated driving capabilities, but it can be a crutch that introduces subtle but serious safety challenges because it’s so difficult to validate. Since spectroscopy classifies materials based on precise spectral signature readings, rather than machine learning-derived correlations, it should be much easier to have confidence in (for example) the functional safety of a system using this sensor relative to a machine learning-based camera system. Indeed, the potential for using a sensor like this to train and validate machine learning algorithms is quite provocative.

Of course, Outsight is a startup, and with only some videos, a press release and a conversation with Hutchings to go on I can’t be certain that its intriguing new sensor will find a market or even be useful in the real world. The company is, however, taking a smart path by partnering with an undisclosed number of automotive OEMs and Tier One suppliers who know how to take a new technology, make it robust enough for automotive applications and manufacture it affordably at scale. Outsight also seems to be avoiding one of the classic automated driving sensor startup pitfalls by not betting the company on fully autonomous vehicles, instead pursuing both ADAS and wholly non-automotive applications like robots for construction and mining as well as aerial drones.

Time will tell whether Outsight will be able to pioneer an automotive market for active hyperspectral imaging sensors, but their potential is easy to understand (at least relative to the technology itself!) and exciting. We will definitely be keeping an eye on this developing space for signs of maturity and adoption.