German regulators have been drafting a set of ethical guidelines to dictate how the artificial intelligence in autonomous vehicles will deal with worst-case scenarios. According to Reuters, an expert government committee is writing what will be the basis for software guidelines that decide the best possible course of action in an autonomous car emergency, with protecting human life placed above all else. While this doesn’t sound very exciting or glamorous, the resulting guidelines and software could prove to be the most important (and possibly most controversial) aspect of driverless cars.

“The interactions of humans and machines is throwing up new ethical questions in the age of digitalization and self-learning systems,” German transport minister Alexander Dobrindt said. “The ministry’s ethics commission has pioneered the cause and drawn up the world’s first set of guidelines for automated driving.”

The guiding principle of the new German guidelines is that human injury and death should be avoided at all costs. In situations where injury or death is unavoidable, the car will have to decide which action will result in the smallest number of human casualties. The regulators have also stipulated that the car may not take the “age, sex or physical condition of any people involved” into account when choosing what to do. Instead, self-driving cars in Germany will prioritize hitting property or animals over humans in the event of a worst-case scenario.
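To make that rule concrete, here is a minimal Python sketch of how such a priority ordering might look. It is an illustration only, not the commission's specification or any automaker's software: the `Maneuver` class, its fields, and `choose_maneuver` are hypothetical names, and the sketch assumes the car could somehow estimate the outcome of each option. Note that the data structure carries no age, sex, or physical-condition fields, so those attributes cannot influence the choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A hypothetical candidate action and its estimated outcome."""
    name: str
    humans_harmed: int          # estimated number of people injured or killed
    other_damage: bool = False  # True if property or animals would be hit


def choose_maneuver(options):
    """Pick the option with the fewest human casualties.

    Damage to property or animals is only used as a tie-breaker,
    so it never outweighs harm to a person.
    """
    return min(options, key=lambda m: (m.humans_harmed, m.other_damage))


options = [
    Maneuver("stay_in_lane", humans_harmed=2),
    Maneuver("brake_hard", humans_harmed=1),
    Maneuver("swerve_into_fence", humans_harmed=0, other_damage=True),
]
print(choose_maneuver(options).name)
```

In this made-up scenario the sketch selects the fence, since property damage is preferred over any human casualty.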

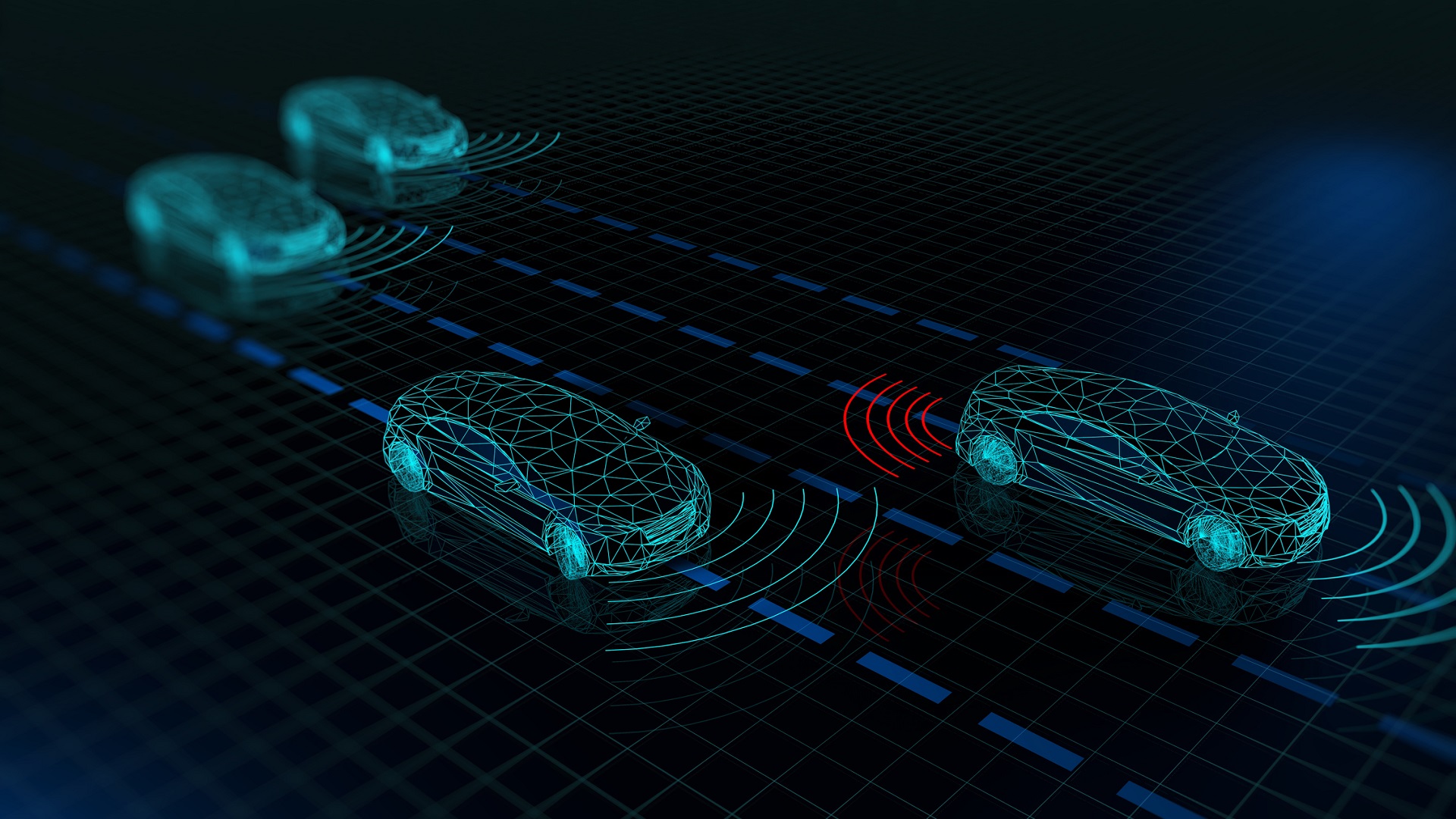

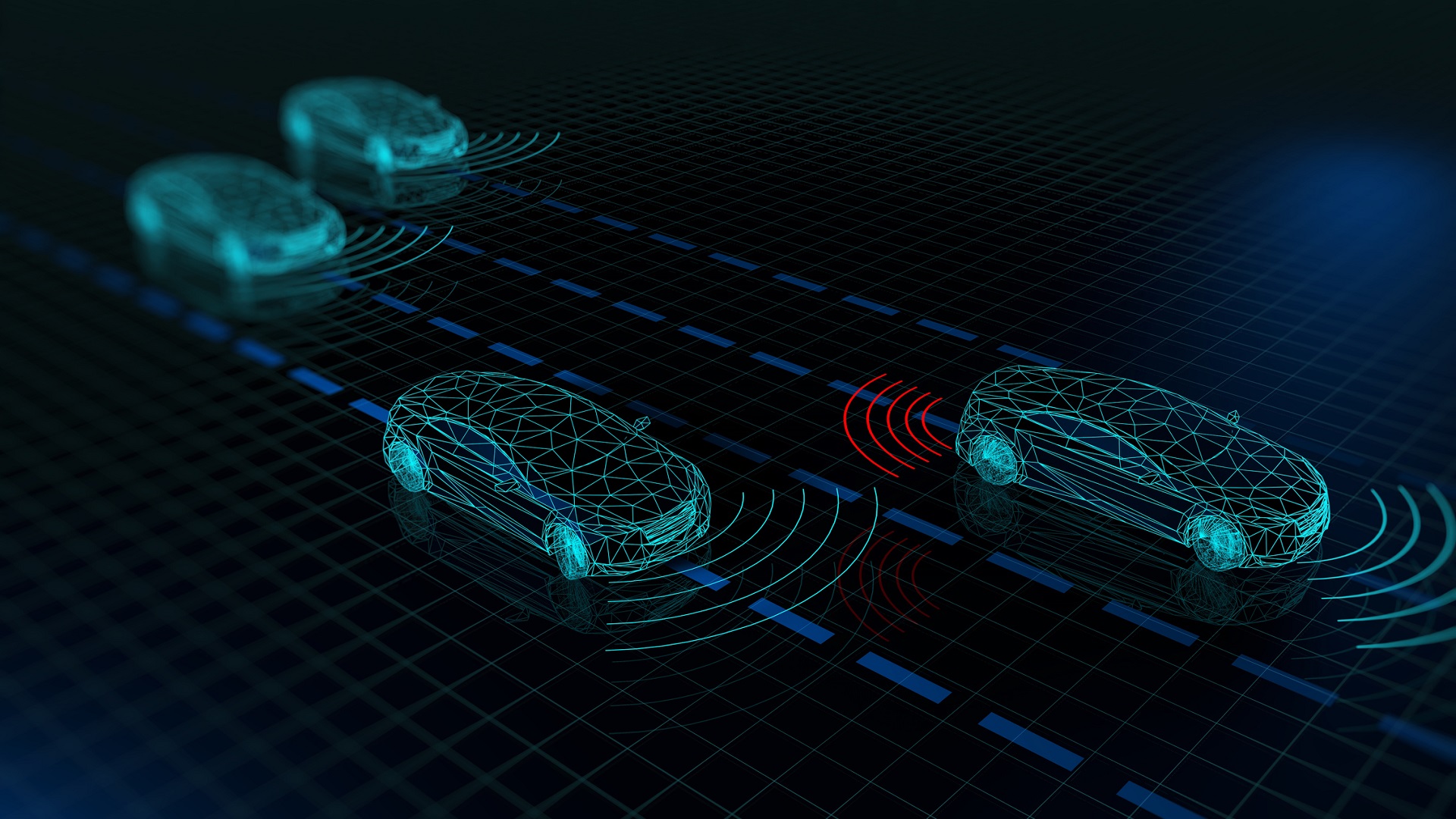

As artificial intelligence capabilities increase, what we will ask of autonomous vehicles will become extremely nuanced and borderline frightening. Currently, in cars with driver-assist modes, there are a few different procedures in place for emergency situations. They vary slightly from automaker to automaker, but these are the basics of what happens: The car is programmed to first alert the human to take control; if the human does not take over, or if there is no time for that, the car is programmed to stop. This typically happens either by pulling over on the side of the road or by slamming on the brakes, depending on the situation. The car isn’t making any real decisions; it’s simply programmed to do its best to not hit anything.
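The fallback procedure described above can be sketched in a few lines of Python. This is a simplified illustration, not any automaker's actual logic: the function name, its three boolean inputs, and the returned labels are all assumptions made for the example, and "depending on the situation" is reduced to a single `can_pull_over` flag.

```python
def emergency_response(time_to_alert: bool,
                       driver_took_over: bool,
                       can_pull_over: bool) -> str:
    """Return the action a driver-assist system takes in an emergency."""
    # Step 1: alert the human, if there is time, and hand over control
    # when the driver responds.
    if time_to_alert and driver_took_over:
        return "human_control"
    # Step 2: otherwise the car stops, either by pulling over...
    if can_pull_over:
        return "pull_over"
    # ...or, when that is not possible, by braking hard.
    return "brake_hard"


print(emergency_response(time_to_alert=True, driver_took_over=False,
                         can_pull_over=True))
```

Every branch ends with the driver in control or the car stopped, which is the point the paragraph makes: there is no weighing of alternatives, only a fixed sequence.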

Of course, pulling over or slamming on the brakes is not always possible, or ideal. There are many situations where accelerating or crossing the center line is the better choice. For the most part, your car doesn’t know that yet. The AI technology to make those types of decisions isn’t quite there on the commercial/consumer side of things. But it is coming.