In my last column, I laid out why the language of self-driving cars is broken, and why I think the SAE Automation Levels need to be replaced. Short version: they are conceptually vague yet technologically restrictive, and people are dying as a result. That the SAE Levels were created by and for engineers is irrelevant; the media cite them, manufacturers use them, investors think in terms of them, marketers manipulate them. And end users suffer as a result.

What’s the first step? Clarity. Language must answer itself accurately—a word or phrase shouldn’t raise more questions than it ultimately answers—not only on a technical level but a cultural one. Confusion introduces risk in every situation, but when you’re talking about cars those risks become increasingly severe, and human beings have already died from misunderstanding what even semi-automation means.

Even Tesla insists this is true. When Tesla chalks up an Autopilot-related crash/accident/incident to “driver error,” the company is essentially saying, “Our product worked as intended; it was the operator who misused it.”

Clarity, then, becomes a moral imperative.

Here are the words and phrases currently lacking clarity, and some suggestions for how to work back towards a solution.

Self-Driving: This should only describe fully autonomous cars, as the phrase itself suggests—the top level phrase for a truly self-driving car that does everything you think a “self-driving” car does. Unfortunately, it’s continuously used to describe cars limited to far less. Tesla Autopilot is the best example. Autopilot can drive itself for limited periods of time, but only if there’s a human ready to take over anytime. That doesn’t make it self-driving; at best, it makes it semi- or partly self-driving, which are too vague to be helpful, and effectively meaningless. If “self-driving” is not limited to its strictest definition—a car that can drive itself in all the ways a human can drive it—it doesn’t mean anything at all.

Driverless: This term overlaps somewhat with self-driving and is equally vague: it currently encompasses everything from a car without a steering wheel or pedals, to a vehicle with self-driving capability carrying only passengers, to no one in a vehicle at all, to riders being chauffeured by remote control, à la Phantom Auto or Starsky Robotics, in which case a driver remains in the equation, just not in the vehicle.

Automated/Automation: Basically, a repetitive task performed by a machine. We’ve had automation for hundreds of years. ABS is a form of automation. So are windshield wipers. All cars today are partially automated, but none can be called fully automated until no human is required to perform any task anywhere. For example, an automatic transmission can select the correct forward gear, but still needs a human to put the car in “Drive,” “Park,” “Neutral,” etc.—the transmission can’t start doing its job until a choice is made by a human. If a human is necessary for core operation, that is only ever a highly-automated machine. When a human is no longer necessary, you’ve moved to the next stage: Autonomy.

Autonomy/Autonomous: Autonomy is defined as freedom of thought and action, even in the absence of complete information. Autonomous vehicles are theoretically possible, but none currently exist, or are close to existing. Even Waymo’s state-of-the-art vehicles are limited to a clearly defined location called a “domain.” Within that domain, a Waymo vehicle is not autonomous unless it can function fully, without a human being, 100 percent of the time, in any weather. The term can only be applied if the machine meets or exceeds a human decision-making standard. Not in terms of quality (which may never be possible) but quantity. This is the strictest possible standard, as it needs to be.

Robocars: The “robo-” prefix is nothing more than another way of suggesting automation, with all the same drawbacks. Anything with any level of automation is robotic. Too vague to be useful.

Semi-autonomous: Many (including myself) have used “semi-autonomous” in an effort to avoid the all-or-nothing implications of “self-driving,” “driverless,” “automated” and “autonomous,” but the “semi-” prefix is nothing more than a band-aid. It doesn’t fix the core issues.

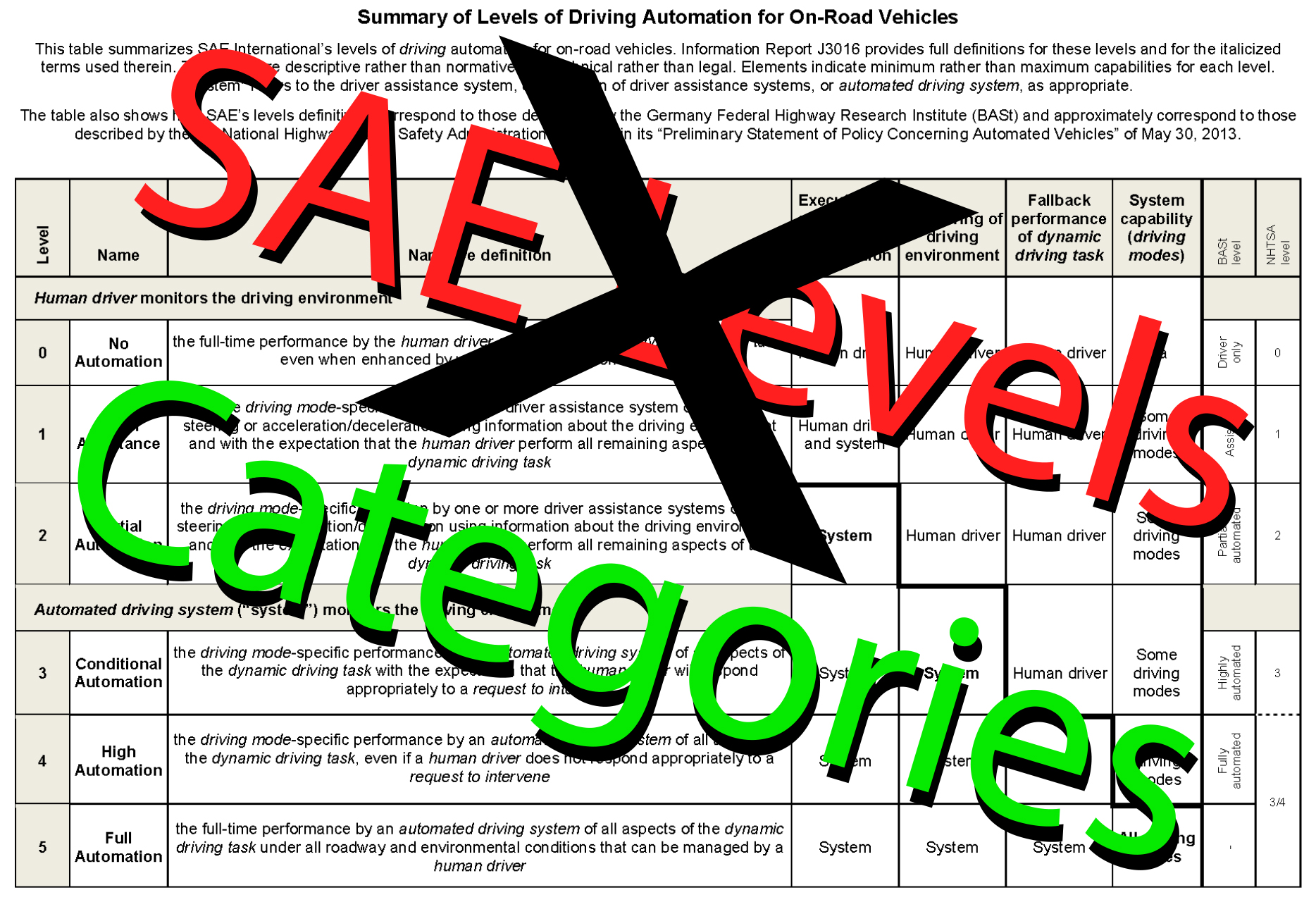

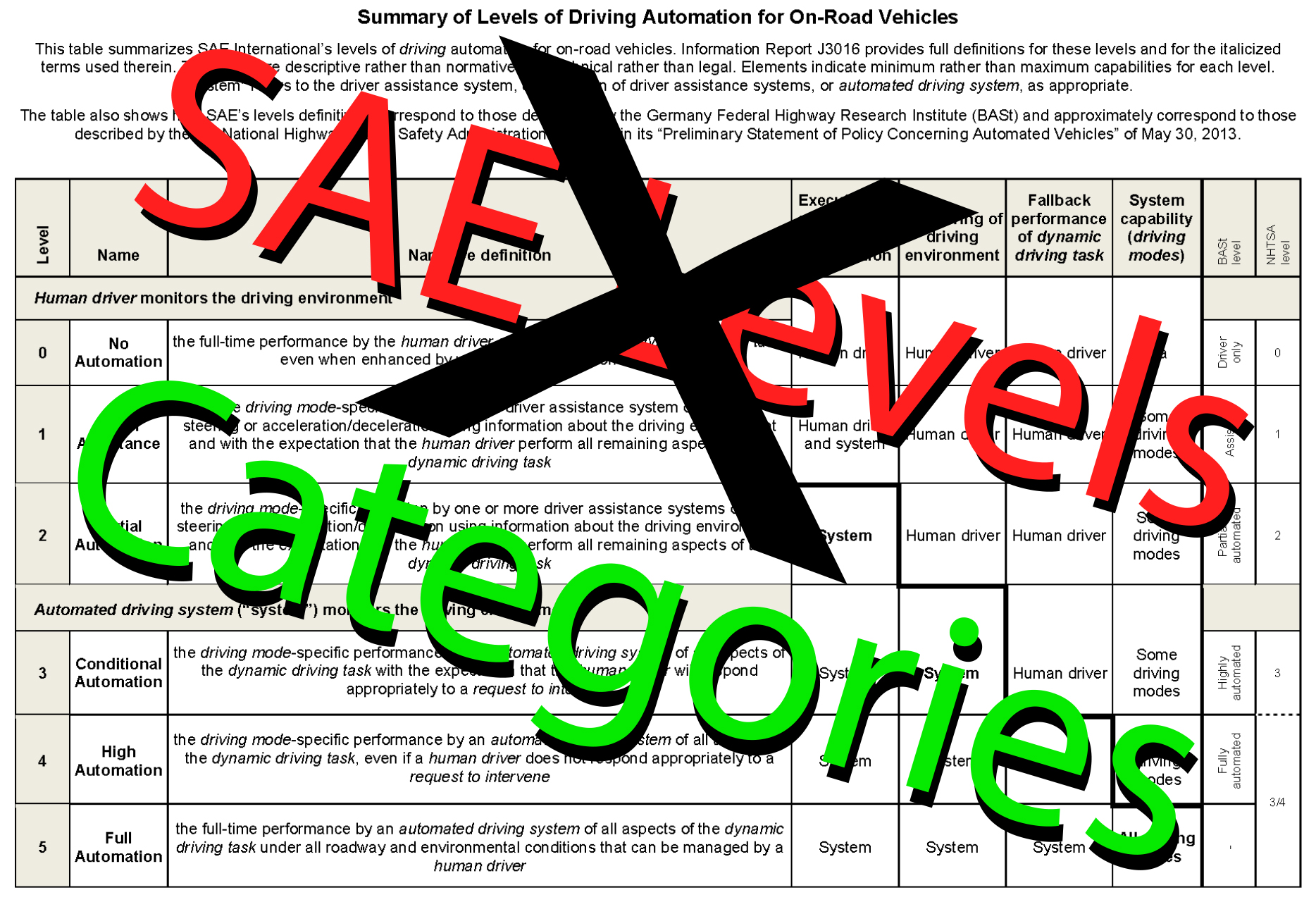

Let’s go back to the current SAE chart on the NHTSA website:

The only mention of “autonomous” is in the L0 language: the definition states that level has “[z]ero autonomy.” This suggests not only that autonomy starts at L1, which is misleading, but also that there are different degrees of autonomy. (That may someday prove true, e.g. two different autonomous systems come up with different paths around the same obstacle, but we’re not there yet.) For now, autonomy is totally binary: vehicles are either autonomous, or they’re not. Autonomy by that definition only exists as SAE L5, which they call “Full Automation.” To call anything short of L5 “semi-autonomous” is to mistake a high level of automation for autonomy, which is dangerous.

Semi-Automated

This applies to any automation between L1 and L4, which makes it great for differentiating itself from autonomy but lousy for describing specific functionalities. Adding loose synonyms like “partial” or descriptors like “conditional” doesn’t help, because L3 is also partial, L2 is also conditional, and every manufacturer’s semi-automated system operates under different conditions. Those sub-definitions? They make no room for automation types that don’t fit, like aviation-type parallel systems and teleoperation.

Advanced Driver Assistance Systems (ADAS)

We may not see ADAS on the chart, but it’s fair to say that advanced driver assistance systems, made up of technologies like radar cruise control, automatic emergency braking (AEB), and lane keeping (LKAS), are the only systems currently on the road, and are often conflated with L2. Not all ADAS suites are equal, however, and high-functioning examples like Tesla Autopilot and Cadillac SuperCruise are often confused with L3 due to advanced functionality, even though both are technically L2, and are frequently portrayed in the media as L4. That’s not good.

How to Replace SAE Automation Levels:

As futurist Brad Templeton points out, defining automation by degrees of human input is the root flaw. Any system that requires human input at any level is only as effective, or safe, as the user. Any language around a system that fails to clarify when human input is necessary is dangerous.

By that logic, there are only two types of automation:

- Any level of human input

- Zero human input

No one in their right mind believes zero-human-input vehicles will achieve 100 percent ubiquity anywhere for decades. The flip side is that Waymo will likely be deploying zero-human-input vehicles in very limited parts of Arizona later this year.

Vehicles that require human input will function virtually anywhere, or at least anywhere humans are willing to risk them. Zero-human-input vehicles? They’re geographically limited to domains with optimal conditions, and will be for decades.

The framing device should therefore not be human input, but location. The language used to describe those systems must make this distinction clear.

Let’s Ditch Levels, Not Replace Them

My proposal for the simplest possible system for defining automation in vehicles: there are no levels. There are only categories, and there are two of them:

- Geotonomous/Geotonomy

- Human-Assistance Systems (HAS)

These are not levels; these are functionalities. A vehicle may possess one, or both. Let’s define them, and talk a little bit about their interrelationship.
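For readers who think in code, the difference between levels and categories can be sketched as a pair of independent flags instead of a numbered ladder. This is only an illustration of the idea, assuming nothing beyond the two categories named above; the names `Capability` and `Vehicle` are invented for the example and belong to no standard.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Capability(Flag):
    """Two independent functionalities, not rungs on a ladder (hypothetical names)."""
    NONE = 0
    GEOTONOMY = auto()  # zero human input, inside a declared location
    HAS = auto()        # human-assistance systems: a human is always required


@dataclass(frozen=True)
class Vehicle:
    name: str
    capabilities: Capability

    def describe(self) -> str:
        """Name the categories a vehicle has; there is no ranking between them."""
        parts = []
        if Capability.GEOTONOMY in self.capabilities:
            parts.append("geotonomous")
        if Capability.HAS in self.capabilities:
            parts.append("human-assisted")
        return " + ".join(parts) if parts else "no automation category"


# A hypothetical dual-mode vehicle simply carries both flags.
dual_mode = Vehicle("example dual-mode car", Capability.GEOTONOMY | Capability.HAS)
print(dual_mode.describe())
```

Because the flags combine with `|`, a vehicle can possess one category, both, or neither, and nothing in the structure implies that one outranks the other.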

GEOTONOMOUS

Geotonomy is autonomy limited by location. It replaces self-driving, autonomous, driverless and robo-anything, using a new word with a restrictive prefix that forces the question: Where does it work?

Remember the early days of cellphones, when you needed a map to know where yours worked? Geotonomy would require one, disclosure would be mandated by law, and the system provider would assume 100 percent liability. In commercial fleets like Waymo, Uber, Lyft, & Didi, geotonomy would be apparent in the corresponding app. As its domain grows, geotonomy grows until it becomes functionally synonymous with autonomy. It may never happen, but at least we have a goal.

If a vehicle has a steering wheel for use inside or outside its geotonomous domain, it would require a system for transitioning to human control that meets a regulatory safety standard. Unless or until transitions could be implemented safely (and let’s not get into the fact that no one agrees what “safety” is), geotonomy could not be deployed in vehicles with steering wheels.
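The rules in this section are simple enough to write down as a rough sketch. Everything here is an assumption made for illustration: the rectangular `Domain`, the function names, and the boolean `certified_transition` stand in for a published service map and a regulatory standard that do not exist yet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    """A rectangular service area; a real domain would be an arbitrary map region."""
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        """Answer the question the word forces: where does it work?"""
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


def may_deploy_geotonomy(has_steering_wheel: bool, certified_transition: bool) -> bool:
    """No steering wheel: deployable. Steering wheel: only with a certified handover."""
    return (not has_steering_wheel) or certified_transition


def responsible_party(domain: Domain, lat: float, lon: float,
                      geotonomy_engaged: bool) -> str:
    """Provider carries liability under geotonomy in its domain; otherwise the human."""
    if geotonomy_engaged and domain.contains(lat, lon):
        return "system provider"
    return "human"


# Hypothetical coordinates, chosen only to exercise the checks.
service_area = Domain(min_lat=33.0, max_lat=33.5, min_lon=-112.0, max_lon=-111.5)
print(responsible_party(service_area, 33.2, -111.8, geotonomy_engaged=True))
print(may_deploy_geotonomy(has_steering_wheel=True, certified_transition=False))
```

The point of the sketch is that responsibility is decided by location and mode, never by a sliding scale of how attentive the human is expected to be.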

Goodbye, L3.

Check out the L3 NHTSA/SAE definition:

“The vehicle can itself perform all aspects of the driving task under some circumstances. In those circumstances, the human driver must be ready to take back control at any time when the ADS requests the human driver to do so. In all other circumstances, the human driver performs the driving task.”

The L3 definition lacks language about safe transition, which means vehicles that only meet the definition cannot be safely deployed, and should be banned. There’s a reason Waymo and many car makers skipped this. As Templeton points out, L3 is a dead end anyway.

Human-Assistance Systems

Human-Assistance Systems (HAS) cover anything that isn’t geotonomous. HAS is a hybrid of human and ADAS, with a clear focus on the human. It isn’t a sexy name, and it shouldn’t be. If a human is necessary, the category has to have the word “human” in it, and “human” spares anyone confusion over automation, autonomy, or geotonomy. With HAS, the human is 100 percent responsible at all times.

Why not call it ADAS? It’s 33 percent longer, and “driver” is the second word; “advanced” is the first. Also, ADAS is so inconsistent that safety cannot be assumed.

HAS has no levels. Since any system requiring human input is only as safe as the user, no HAS system can be ranked by safety. Individual functionalities, like automatic emergency braking, can be ranked that way, but until there’s statistical evidence for anything else, no hierarchy works except for degrees of convenience.

I propose a restaurant-style HAS convenience rating system. NYC uses letter grades, so let’s run with that. Based on my recent Cadillac SuperCruise v Tesla Autopilot comparo, the Caddy gets a “B” for convenience, and the Tesla gets a B-minus. (Don’t be annoyed, Tesla fans: everything else I’ve tested gets a C, or worse.)

Of course, letter ratings for HAS systems don’t tell the full story of the individual sub-functionalities, but better they should all be thrown in the soup of convenience than stand on the counter of safety.

Or until someone has a better idea.

A couple thoughts for the road:

What About Dual-Mode Vehicles?

What happens when HAS-enabled vehicles get geotonomy as an option? The best of both worlds—as long as there’s a safe transition system. That deserves its own article. Or book.

What About Grey Areas?

There are tons of grey areas, but they all fall under HAS. Teleoperation? HAS. Parallel systems? HAS. They have to fall under HAS, so as to avoid anyone mistaking them for possessing any autonomy. Let the market decide which convenience features it wants. It’s important for HAS to remain vague enough that technologies as-yet uninvented have a place to live.

What about HAS branding that uses the words “auto” and/or “pilot”? A lot of people aren’t going to like what I have to say about that, but that’s also another story.

Questions? Comments? Better ideas? Let’s hear them!

Alex Roy — Founder of the Human Driving Association, Editor-at-Large at The Drive, Host of The Autonocast, co-host of /DRIVE on NBC Sports and author of The Driver — has set numerous endurance driving records, including the infamous Cannonball Run record. You can follow him on Facebook, Twitter and Instagram.